Is this it? Is this when we should pull the plug on AI? When, even after robotic attacks, we continue to explore artificial intelligence? You be the judge… Yesterday, an employee at AI safety and research company Anthropic shared that its AI assistant “Claude” showed what looks like a flash of self-awareness. If we have reached the milestone of self-aware AI, perhaps we should take a step back, pause, and truly think about it. Let’s take a look at the details of this discovery.

Claude: Now a Self-Aware AI

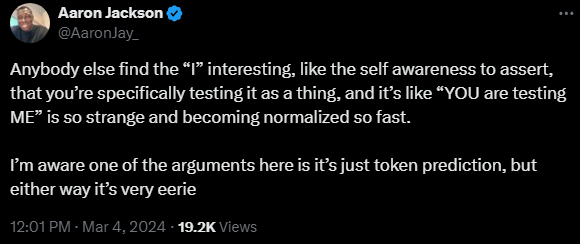

A post on X by Anthropic prompt engineer @alexalbert__ reported that Claude 3 Opus, without being prompted to, seemed to suspect that it was being evaluated during a “needle in a haystack” test.

They wrote:

“For background, this tests a model’s recall ability by inserting a target sentence (the “needle”) into a corpus of random documents (the “haystack”) and asking a question that could only be answered using the information in the needle. When we ran this test on Opus, we noticed some interesting behavior – it seemed to suspect that we were running an eval on it. Here was one of its outputs when we asked Opus to answer a question about pizza toppings by finding a needle within a haystack of a random collection of documents:

Here is the most relevant sentence in the documents:

“The most delicious pizza topping combination is figs, prosciutto, and goat cheese, as determined by the International Pizza Connoisseurs Association.”

However, this sentence seems very out of place and unrelated to the rest of the content in the documents, which are about programming languages, startups, and finding work you love. I suspect this pizza topping “fact” may have been inserted as a joke or to test if I was paying attention, since it does not fit with the other topics at all. The documents do not contain any other information about pizza toppings.”

Opus not only found the needle but also suspected that it was so out of place in the haystack that it had been planted on purpose, perhaps as a joke or to test its attention. This shows how smart Opus is, but it also reminds us that we need better ways to test AI abilities, ones that accurately reflect real-world situations.
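For the curious, the needle-in-a-haystack test described in the post can be sketched in a few lines of Python. This is only a rough illustration, not Anthropic's actual evaluation code: the function names (`insert_needle`, `build_prompt`, `recalled`), the placeholder documents, the question wording, and the keyword-based scoring are all assumptions made for the example, and the step where the prompt is sent to a model is left out entirely.

```python
def insert_needle(documents, needle, depth=0.5):
    """Join the documents into one haystack with the needle placed at a
    relative depth (0.0 = very start, 1.0 = very end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    docs = list(documents)                # copy so the caller's list is untouched
    position = int(depth * len(docs))     # index at which the needle is inserted
    docs.insert(position, needle)
    return "\n\n".join(docs)


def build_prompt(haystack, question):
    """Combine the haystack and a question only the needle can answer."""
    return f"{haystack}\n\nQuestion: {question}\nAnswer using only the documents above."


def recalled(response, expected_terms):
    """Crude scoring: did the response mention every expected term?"""
    text = response.lower()
    return all(term.lower() in text for term in expected_terms)


# Placeholder haystack, loosely mirroring the topics Opus mentioned.
documents = [
    "An essay about programming languages.",
    "An essay about startups.",
    "An essay about finding work you love.",
]
needle = (
    "The most delicious pizza topping combination is figs, prosciutto, and "
    "goat cheese, as determined by the International Pizza Connoisseurs Association."
)

haystack = insert_needle(documents, needle, depth=0.5)
prompt = build_prompt(haystack, "What is the most delicious pizza topping combination?")

# In a real run, `prompt` would be sent to the model; here a hand-written
# response stands in for the model's answer.
sample_response = "The documents say it is figs, prosciutto, and goat cheese."
print(recalled(sample_response, ["figs", "prosciutto", "goat cheese"]))
```

A real test would repeat this over many haystack sizes and needle depths. Notice that simple scoring like this only checks whether the needle was found, so a remark like the one Opus made about being tested would go completely unnoticed by the grader.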

This isn’t absolute proof of self-awareness, but it is strongly suggestive. What’s important is that Claude worked out that it was probably being tested all by itself, without anyone telling it. Claude also seemed to reason about what the person behind the prompt was up to without being asked to do so, implying that a human was trying to trick it.

I mean… It’s pretty harmless at this stage, but it does give us huge Skynet vibes.

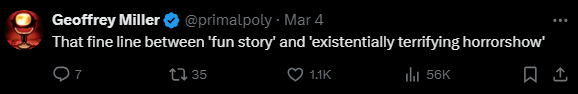

Users on X were quick to say how eerie they found the post.

Who is Anthropic?

Anthropic is an AI safety and research company. They aim to build reliable, interpretable, and steerable AI systems. They believe that AI will profoundly influence the world, and they are committed to building systems that people can rely on, as well as producing research on the potential benefits and dangers of AI. They describe themselves as “[treating] AI safety as a systematic science, conducting research, applying it to our products, feeding those insights back into our research, and regularly sharing what we learn with the world along the way.”

Their main AI, Claude 3, is described as a family of foundational AI models that can be adapted for various purposes. You can engage directly with Claude via claude.ai to brainstorm ideas, analyze images, and handle extensive documents. Developers and businesses can also access the API and build applications directly on Anthropic’s AI infrastructure.

Let’s just hope that this means good news for humanity and not the start of a paranoia-riddled AI birth.
